🌐 A New Frontier: When Agents Become the Interface

On July 17, 2025, OpenAI sent a bold signal. It was not GPT-5 (yet) but something equally disruptive: ChatGPT Agent, a general-purpose AI assistant that can browse, research, shop, plan, and create.

At first glance, it felt premature. Demos looked polished, but early users complained of slow responses and clunky execution. Still, make no mistake:

More than a tool, it’s a strategic bid to become the default entry point to the internet.

As agents become the front door to services, search engines, and transactions, power is shifting from GUIs to LLM-driven APIs.

ChatGPT now handles 2.5 billion prompts per day, roughly 18% of Google’s daily search volume.

The big question isn’t just who has the best model—it’s also how effectively the agent can interact with the right APIs to get things done reliably.

But building these agents isn’t just about giving them language skills.

Under the hood, they need structure—an execution environment, tool access, and decision-making logic. That’s where architecture matters.
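
To make those three pieces concrete, here is a minimal sketch of how they might fit together. Everything in it is hypothetical: the `TOOLS` registry, the `decide` function, and the fixed two-step plan are stand-ins chosen so the example runs on its own, and a real agent would replace `decide` with a call to an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Tool access: a registry mapping tool names to plain callables.
# Both tools are toy stand-ins, not real search or summarization services.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": lambda query: f"results for '{query}'",
    "summarize": lambda text: text[:40],
}


@dataclass
class AgentState:
    goal: str
    # Each entry is (tool name, observation returned by that tool).
    history: List[Tuple[str, str]] = field(default_factory=list)


def decide(state: AgentState) -> Tuple[str, str]:
    """Decision-making logic (assumption: a fixed plan instead of an LLM)."""
    if not state.history:
        return "search", state.goal
    if len(state.history) == 1:
        return "summarize", state.history[-1][1]
    return "done", state.history[-1][1]


def run_agent(goal: str, max_steps: int = 5) -> str:
    """Execution environment: a bounded loop that runs one tool per step."""
    state = AgentState(goal)
    for _ in range(max_steps):
        action, argument = decide(state)
        if action == "done":
            return argument
        observation = TOOLS[action](argument)
        state.history.append((action, observation))
    raise RuntimeError("step budget exhausted before the agent finished")


print(run_agent("cheap flights to Lisbon"))
```

The step budget is the part worth noticing: without a hard limit, a confused decision function can loop forever, which is one reason the execution environment is treated as its own concern rather than folded into the model.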

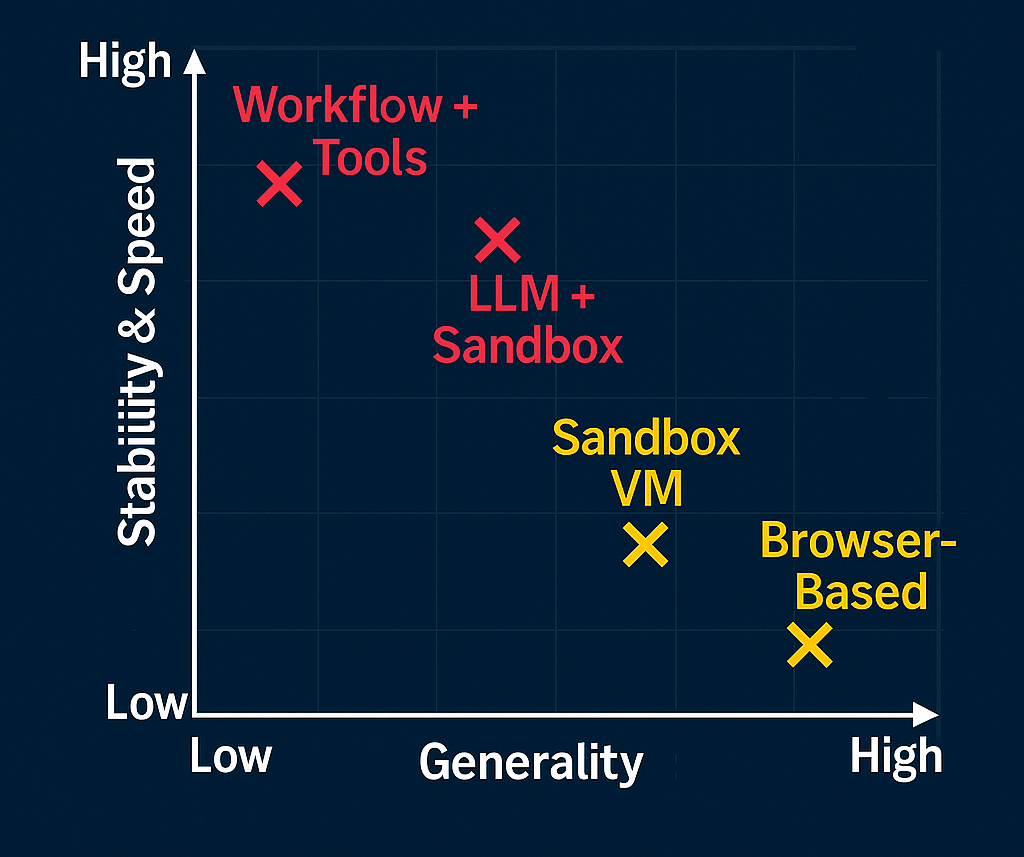

So how are today’s most advanced AI agents actually built? Let’s break down the four dominant approaches shaping this landscape:

🧠 The 4 Core Technical Approaches to General AI Agents

Four dominant technical paradigms are emerging in 2025:

| Approach | Core Mechanism | Strengths | Weaknesses |
|---|---|---|---|
| Browser-Based | Vision models simulate human browsing, clicking, and reading | Universal; can theoretically access any online content | Very slow, high token usage, fragile to UI changes |
| Sandbox VM | AI runs tasks in a virtual OS (e.g. Linux) to simulate a real computer | Secure, complex logic possible, handles code, file ops | Slow and limited in authenticating with real services |
| LLM + Sandbox | Pre-designed closed environments + LLMs orchestrate code/tool execution | Fast, reliable for specific workflows; low cost | Lacks flexibility, limited to predefined toolsets |
| Workflow + Tools | Pre-integrated APIs and automation flows, orchestrated by lightweight LLM routing | Extremely fast, production-ready, high delivery success | Narrow scope; doesn’t scale to novel or open-ended tasks |
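
The last row is the easiest to picture in code. Below is a rough sketch of the "Workflow + Tools" idea, assuming a keyword match stands in for the lightweight LLM router. The workflow functions (`update_crm`, `start_onboarding`) and the `WORKFLOWS` table are hypothetical names invented for illustration, not any platform's actual API.

```python
from typing import Callable, Dict, Optional


def update_crm(request: str) -> str:
    # Placeholder for a pre-integrated CRM API call.
    return f"CRM updated: {request}"


def start_onboarding(request: str) -> str:
    # Placeholder for a pre-built onboarding automation flow.
    return f"onboarding started: {request}"


# Predefined workflows, keyed by the keyword that triggers them.
WORKFLOWS: Dict[str, Callable[[str], str]] = {
    "crm": update_crm,
    "onboard": start_onboarding,
}


def route(request: str) -> Optional[str]:
    """Return the key of the first workflow whose keyword appears in the request.

    Assumption: a production router would ask a small LLM to classify the
    request; keyword matching keeps this sketch self-contained.
    """
    lowered = request.lower()
    for keyword in WORKFLOWS:
        if keyword in lowered:
            return keyword
    return None


def handle(request: str) -> str:
    name = route(request)
    if name is None:
        # The narrow scope from the table: anything outside the catalog is refused.
        return "unsupported request"
    return WORKFLOWS[name](request)


print(handle("Update the CRM record for Acme"))
print(handle("Write me a poem"))
```

The second call shows the trade-off directly: the router is fast and predictable because it only knows a fixed catalog, and it has nothing to offer once a request falls outside it.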

🧪 Examples & Use Cases

| Architecture | Example Platform | Typical Use Cases |
|---|---|---|
| Browser-Based | OpenAI ChatGPT Agent (Operator + Deep Research) | Researching across websites, planning trips, buying products, summarizing web articles. |
| Sandbox VM | Manus | Secure coding, report generation, file ops, local compute tasks. |
| LLM + Sandbox | Genspark | Presentation builders, email writers, LinkedIn/GitHub automation, database interactions. |
| Workflow + Tools | Pokee, Zapier, Unipath, n8n | CRM updates, onboarding automation, API orchestration, internal workflows. |

📉 Trade-Offs: Why “General” ≠ “Best”

Just because something is more general doesn't mean it's better.

- Browser-based agents feel like the “holy grail” (they can do everything), but they’re slow and fragile.

- Sandbox-based agents are more secure and can handle complex workflows—but struggle with real-time internet tasks.

- LLM + sandbox solutions balance speed and control, but only within their predefined toolsets.

- And workflow + tool agents? They’re fast, cheap, reliable—but highly specific in what they can do.

🛠️ The Rise of Workflow-First AI

What’s surprising is how quickly the market is leaning into the workflow + tool approach. Platforms like Zapier, Pokee, n8n, and even internal tools at OpenAI are embracing modular agents—ones that don’t try to be everything, but instead do one thing very well, fast.

That’s not a compromise. It’s a strategy.

We're seeing a future where:

- Personal agents are made of modular, pluggable services.

- Corporate agents are built for reliability, not just “wow factor.”

- The most useful agents are the most boring—predictable, testable, scalable.

🔮 What Happens Next: Agent-to-Agent Might Become the New SEO

As AI agents become the default interface to the internet:

- Website traffic will drop—humans won’t be the ones clicking.

- Agents will query, decide, and execute—sometimes without human input.

- Companies like Google (with its Agent2Agent protocol), OpenAI, and Anthropic are all building toward Agent-to-Agent (A2A) protocols to own this new gateway.

This means a few things:

- Ads won’t be placed on pages, but inside agent workflows.

- APIs will become the new product surfaces.

- Creators and SaaS providers will be paid per use, not per view.

In this world, agents don’t just augment users—they become the users.

🧠 Choosing the Right API: The Next UX Layer

Once model quality is comparable, the differentiator is how reliably an agent can call the right APIs.

But with so many tools offering the same API functionality—like scheduling meetings, transcribing audio, or querying a database—how will agents decide which one to use?

This introduces a new kind of UX problem: automated API selection. And it's shaping up to be the next competitive frontier.

Here’s how this might play out:

- Reputation & Ranking: APIs may be scored based on performance, uptime, latency, accuracy, or even user feedback—just like SEO for websites.

- Agent Protocols (A2A): API providers will need to follow emerging agent-to-agent (A2A) protocols, making themselves “agent-compatible” through clean interfaces and metadata.

- Marketplaces & Bidding: Agents might select APIs from curated marketplaces. In the future, APIs could even bid for default placement—just like sponsored results in search engines.

- User Preferences: Agents could choose APIs based on the user’s prior usage or organizational policy—“this team prefers Slack, not Teams.”

- Fallback Systems: To maximize reliability, agents may use redundant APIs as backups in case of failure.
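
Several of these mechanisms can be sketched together. The example below is a rough illustration, not a description of how any existing agent picks APIs: the `ApiCandidate` fields, the scoring formula, and the provider names are all assumptions made up for the sketch. It ranks candidates by a simple reputation score, boosts the ones the user or organization prefers, and falls back to the next candidate when a call fails.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List


@dataclass
class ApiCandidate:
    name: str
    uptime: float        # fraction of successful calls, 0.0 to 1.0
    latency_ms: float    # typical response time in milliseconds
    call: Callable[[str], str]


def score(api: ApiCandidate, preferred: FrozenSet[str]) -> float:
    """Hypothetical reputation score: reward uptime, penalize latency,
    and add a fixed bonus for providers named in the preference set."""
    base = api.uptime - api.latency_ms / 10_000
    return base + (1.0 if api.name in preferred else 0.0)


def rank(candidates: List[ApiCandidate], preferred: FrozenSet[str]) -> List[ApiCandidate]:
    return sorted(candidates, key=lambda api: score(api, preferred), reverse=True)


def call_with_fallback(
    candidates: List[ApiCandidate], preferred: FrozenSet[str], payload: str
) -> str:
    """Try candidates in ranked order and return the first successful result."""
    errors = []
    for api in rank(candidates, preferred):
        try:
            return api.call(payload)
        except Exception as exc:  # any failure moves on to the next candidate
            errors.append(f"{api.name}: {exc}")
    raise RuntimeError("all candidates failed: " + "; ".join(errors))


def flaky_slack(payload: str) -> str:
    # Simulates an outage at the preferred provider.
    raise ConnectionError("timeout")


candidates = [
    ApiCandidate("slack", uptime=0.999, latency_ms=120, call=flaky_slack),
    ApiCandidate("teams", uptime=0.995, latency_ms=200,
                 call=lambda payload: f"sent via teams: {payload}"),
]

# The team prefers Slack, so it is tried first; its failure triggers the fallback.
print(call_with_fallback(candidates, frozenset({"slack"}), "standup at 10"))
```

A fixed preference bonus is the crudest possible design. A marketplace with bidding would presumably add another term to the score, which is exactly why the scoring function is where the competition would happen.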

In short: the agent becomes the new operating system. APIs become the new apps.

This reshapes the future of product design. SaaS providers won’t just need a good UI. They’ll need great APIs that are discoverable, reliable, and agent-friendly.

✨ Final Thought

A general AI agent is like a trusted family doctor—someone who knows your context, performs a quick check-up, and then refers you to the right specialist when needed. It doesn't need to do everything. It needs to know enough to coordinate everything.

In this future, Agent-to-Agent (A2A) protocols become essential.

Agents will no longer work in isolation. They’ll collaborate, negotiate, and delegate across domains—handling everything from emails to engineering to ecommerce. That’s the real internet of agents.

We’re not just building tools.

We’re building a new layer of coordination—between humans, machines, and everything in between.